According to TheRegister.com, a research paper titled “Hallucination Stations: On Some Basic Limitations of Transformer-Based Language Models” argues that agentic AI tools face a hard limit. The paper, by Varin Sikka and Vishal Sikka (a former CTO of SAP and former CEO of Infosys), uses mathematical reasoning to argue that LLMs cannot reliably carry out tasks whose computational complexity exceeds that of their own core operations. That matters for agentic AI, which aims to automate everything from information lookup to financial transactions and industrial control. The paper, which Wired also highlighted, further claims that using agents to verify other agents’ work will fail for the same reason. Separately, research firm Gartner forecast last year that over 40 percent of agentic AI projects will be cancelled by the end of 2027 due to cost, unclear business value, and risk. The authors conclude that “extreme care” is needed when applying LLMs to problems that require accuracy.

The Complexity Ceiling Isn’t Just a Bug

Here’s the thing: this isn’t about finding a better prompt or fine-tuning the model. The paper argues there’s a fundamental, mathematical ceiling. If the task you ask the model to reason through requires more computational “steps” than its architecture can perform in producing a response, it will hallucinate an answer. It doesn’t just get slower or less confident; it becomes systematically wrong. And that’s terrifying when you think about the hype. These systems are being proposed to manage logistics, write and verify critical software, and control equipment. The risk isn’t that they’re occasionally quirky; it’s that they will be predictably wrong on the hard problems, and we might not find out until it’s too late.
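To make the shape of the argument concrete, here is a toy Python model. It assumes, as a simplification, that the work a transformer does for an input of n tokens scales like n²·d (self-attention with embedding width d), and compares that with a hypothetical task whose exact solution needs about n³ basic steps. The width of 4096 and the cubic task are illustrative assumptions, not figures from the paper or from any real model.

```python
# Toy model only. Assumes the work a transformer does on n input tokens
# scales like n^2 * d (self-attention with embedding width d), and compares
# it with a hypothetical task needing about n^3 basic steps for the same input.
# All numbers are illustrative, not measurements of any real model.


def model_budget(n_tokens: int, d_model: int) -> int:
    """Rough operation count for one self-attention pass: n^2 * d."""
    return n_tokens ** 2 * d_model


def cubic_task_cost(n_tokens: int) -> int:
    """Steps for a hypothetical task that needs n^3 operations to solve exactly."""
    return n_tokens ** 3


def exceeds_budget(n_tokens: int, d_model: int) -> bool:
    """True when the task needs more steps than the toy budget provides."""
    return cubic_task_cost(n_tokens) > model_budget(n_tokens, d_model)


if __name__ == "__main__":
    d = 4096  # hypothetical embedding width
    for n in (1_000, 4_096, 10_000, 100_000):
        print(f"n={n:>7}: task exceeds budget? {exceeds_budget(n, d)}")
```

Under these assumptions the crossover sits at n = d: once the input is longer than the embedding width, the cubic task needs more steps than the budget provides, and no change in prompt wording alters that count. Real models have many layers and generate many tokens, so the true budget is larger; the sketch only shows that a fixed polynomial budget is eventually outgrown by a task of higher complexity.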

Why Verification Fails Too

This next point is the real kicker. A common proposed safety net is to have a second AI agent check the first agent’s work. Sounds smart, right? But the paper argues that this is often a dead end. Verifying that a solution is correct can be a harder computational task than generating the solution in the first place. So if the first agent failed because the task was too complex, a verifier built on the same architecture faces an even harder problem and will likely fail too. The authors specifically call out software development, a massive target for this technology. That suggests the dream of fully autonomous, self-correcting AI coding assistants may rest on a foundation that can’t bear the weight. It’s like asking someone who failed a math test to grade it: they might catch the *obvious* errors, but the subtle, fundamental mistakes? No chance.
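A classic example of verification outrunning generation, chosen here for illustration rather than taken from the paper, is the travelling salesman problem. Producing *some* valid tour is trivial, and checking that a tour is valid takes one pass over it. Checking that a tour is the *shortest* one is another matter: the brute-force sketch below compares it against all (n−1)! orderings that start at city 0, and no known exact method avoids exponential worst-case time. The function names are hypothetical.

```python
import math
from itertools import permutations

Point = tuple[float, float]


def tour_length(tour: list[int], pts: list[Point]) -> float:
    """Length of the closed tour that visits pts in the given order."""
    return sum(
        math.dist(pts[tour[i]], pts[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def is_valid_tour(tour: list[int], n: int) -> bool:
    """Cheap check: the tour visits each of the n cities exactly once."""
    return sorted(tour) == list(range(n))


def is_optimal_tour(tour: list[int], pts: list[Point]) -> bool:
    """Expensive check: compare against every tour that starts at city 0.

    This loops over (n-1)! orderings, so it becomes infeasible quickly.
    """
    n = len(pts)
    if not is_valid_tour(tour, n):
        return False
    best = min(tour_length([0, *rest], pts) for rest in permutations(range(1, n)))
    # Small tolerance guards against floating-point rounding.
    return tour_length(tour, pts) <= best + 1e-9


if __name__ == "__main__":
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    for t in ([0, 1, 2, 3], [0, 2, 1, 3]):
        print(t, is_valid_tour(t, len(square)), is_optimal_tour(t, square))
```

On the four corners of a unit square, the perimeter tour [0, 1, 2, 3] passes both checks, while the crossing tour [0, 2, 1, 3] is valid but not optimal. At 20 cities, the same brute-force check would already face 19! (about 1.2 × 10^17) orderings.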

The Practical Fallout

So what does this mean in the real world? Look, it doesn’t mean all agentic AI is doomed. The Sandia National Labs example shows promise for well-scoped, assisted tasks. But it does force a massive reality check. Gartner’s prediction that over 40 percent of projects will be cancelled by 2027 starts to make brutal sense. If the core technology has a hard limit on complexity, business cases for full automation in critical areas evaporate. Costs will balloon as companies try to build guardrails and human-in-the-loop systems. The “insufficient risk controls” Gartner cited look closely tied to this fundamental limitation: you can’t control a risk whose boundaries you don’t fully understand. That helps explain why executives at Davos are worried. They’re being sold a transformative technology that, according to this math, has a built-in breaking point.

Where Do We Go From Here?

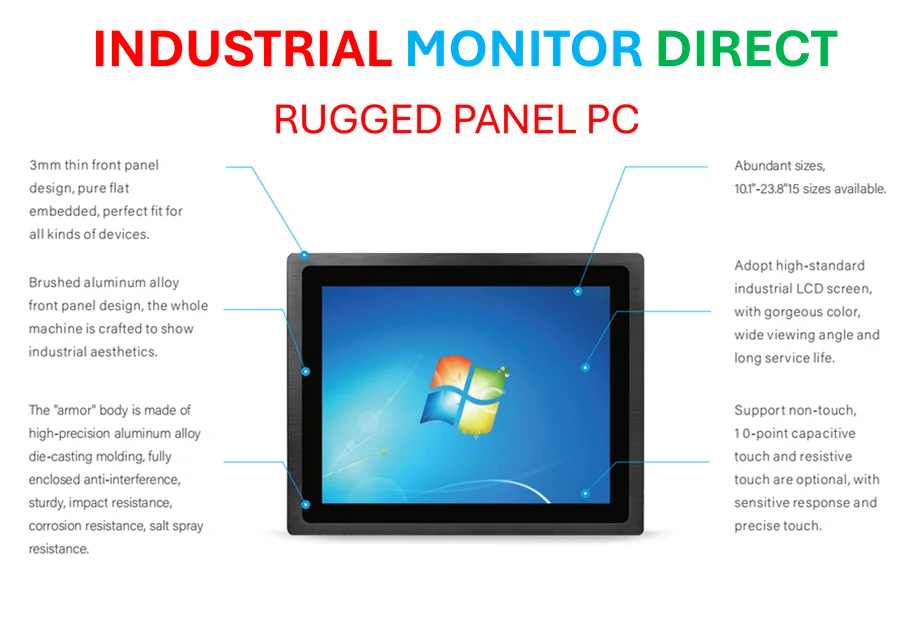

The paper isn’t all doom; it mentions ongoing work on mitigations such as composite systems and constrained models. That points to the path forward: humility. We have to stop treating LLMs as general reasoning engines and start treating them as powerful but flawed components within a larger, carefully engineered system. That might mean using them for idea generation or drafting while keeping a human, or a very different kind of classical software, firmly in the loop for verification and execution, especially for anything that touches the physical world. In industries that depend on precision, such as manufacturing or infrastructure control, the hardware running these systems, from industrial panel PCs to servers, is chosen for rugged durability and stability. The AI software layered on top deserves the same rigorous scrutiny. The hype train is going to hit this complexity wall. The question is how many projects wreck themselves before we all slow down.