According to Dark Reading, the Cybersecurity and Infrastructure Security Agency (CISA), the National Security Agency (NSA), and the Australian Signals Directorate’s Australian Cyber Security Centre have published a new joint advisory. This guidance details four key principles for integrating artificial intelligence into operational technology environments: understand AI, assess AI use in OT, establish AI governance, and embed safety and security. Experts like Floris Dankaart of NCC Group warn that the non-deterministic nature of many AI models, like LLMs, clashes with OT’s need for stability. Furthermore, Integrity Security Services CEO David Sequino points out that most OT systems lack the foundational trust in device data that AI requires to make safe decisions. The advisory also overlooks a critical element: how attackers are already using AI to find vulnerabilities and orchestrate espionage campaigns.

AI Clashes with OT Reality

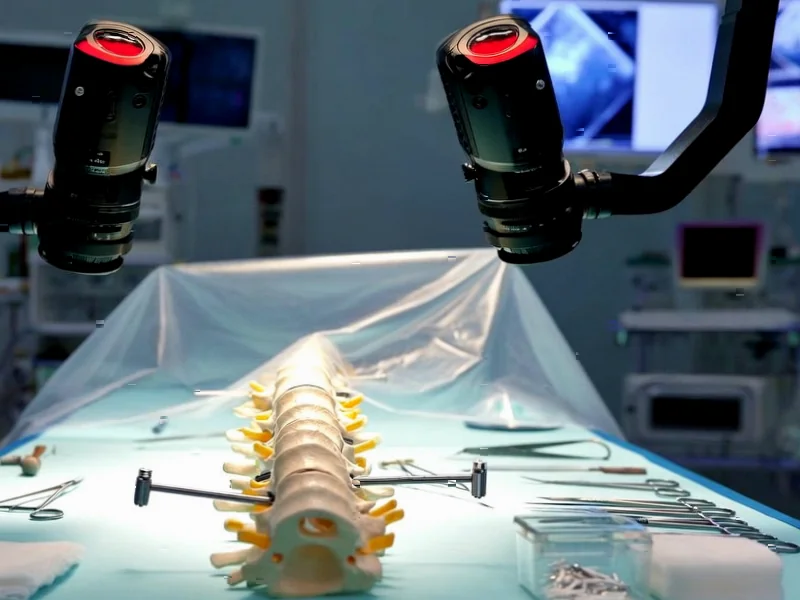

Here’s the thing: operational technology is built on predictability. A valve opens at a specific pressure, a motor stops at a precise temperature. It’s deterministic. AI, especially the generative kind, is famously not. As Dankaart notes, it can use a new random seed on each run and so produce different outputs for the same input. Injecting that kind of chaos onto a factory floor or into a power grid is, frankly, terrifying. It’s not just about a weird answer; it’s about model drift or a silent failure that could lead to a physical process going dangerously out of spec. The guidance from CISA and its partners is directionally correct, but it assumes a level of foundational integrity that simply doesn’t exist in many OT environments. How can an AI make a good decision if it can’t even trust the data from the sensor feeding it?
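To make the contrast concrete, here is a minimal Python sketch. It is an illustration only: `interlock_should_trip`, `sampled_action`, and the pressure numbers are hypothetical names and values chosen for this example, and the "model" is a toy random sampler standing in for a sampling-based AI system, not a real LLM.

```python
import random


def interlock_should_trip(pressure_kpa: float, limit_kpa: float = 850.0) -> bool:
    """Deterministic rule: the same reading always gives the same answer."""
    return pressure_kpa >= limit_kpa


def sampled_action(pressure_kpa: float, seed: int) -> str:
    """Toy stand-in for a sampling-based model (assumption, not a real LLM).

    The action is drawn from a probability distribution, so the result
    depends on the random seed as well as on the input.
    """
    rng = random.Random(seed)
    # Hypothetical probability that the valve should open, clamped to [0, 1].
    p_open = min(max((pressure_kpa - 800.0) / 100.0, 0.0), 1.0)
    return "open_valve" if rng.random() < p_open else "hold"


if __name__ == "__main__":
    reading = 850.0  # identical input on every call

    # The rule-based interlock returns the same value every time.
    print([interlock_should_trip(reading) for _ in range(5)])

    # The sampled action changes with the seed even though the input does not.
    print([sampled_action(reading, seed) for seed in range(10)])
```

Pinning the seed makes the toy sampler repeatable, which is one reason fixed seeds and tightly constrained outputs tend to come up whenever this kind of model gets near a control loop.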

The Trust Gap is Massive

This gets to Sequino’s point about trust. Many OT devices can’t cryptographically authenticate their firmware or prove they are who they say they are. So you have this “garbage in, gospel out” problem. The AI gets bad data, makes a catastrophic decision, and no one can trace why because the system lacks explainability. Building that trust requires a whole supply-chain and manufacturing overhaul—think signed firmware, verified components, and automated life cycle management. That’s a decades-long project for industries running equipment built in the 90s. And let’s be real, the burden is falling on tiny, overworked security teams already drowning in legacy tech. Adding AI governance on top? It’s a recipe for shortcuts and new vulnerabilities.
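As a rough illustration of what “trusting the data” can mean in code, the sketch below has a sensor attach a keyed hash (HMAC) to each reading so the consumer can reject anything that was altered in transit. This is an assumption-laden simplification: the shared key, the field names, and the `sign_reading` and `verify_reading` helpers are all hypothetical, and a real deployment would more likely rely on asymmetric signatures with hardware-protected keys.

```python
import hashlib
import hmac
import json


def sign_reading(key: bytes, reading: dict) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_reading(key: bytes, reading: dict, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_reading(key, reading)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    # Hypothetical demo key; a real key would never be hard-coded.
    key = b"demo-key-not-for-production"
    reading = {"sensor_id": "PT-101", "pressure_kpa": 842.5, "seq": 17}
    tag = sign_reading(key, reading)

    # An attacker changes the value but cannot produce a matching tag.
    tampered = dict(reading, pressure_kpa=600.0)

    print("original accepted:", verify_reading(key, reading, tag))
    print("tampered accepted:", verify_reading(key, tampered, tag))
```

The check only proves the reading was not modified by someone without the key. It says nothing about whether the sensor itself is running genuine firmware, which is the harder supply-chain problem described above.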

Attackers Are Already Ahead

But wait, it gets worse. While defenders are wrestling with these basic hygiene issues, attackers are weaponizing AI right now. Dankaart highlights this as a major omission in the guidance. We’re already seeing LLMs used to find vulnerabilities and AI orchestrating espionage. Imagine an AI-powered attack that learns the normal patterns of an OT network and manipulates sensor data just enough to keep operator screens looking green while a physical attack happens in the background. The skills gap here is a huge risk. OT personnel are experts in physical processes, not AI troubleshooting. Attackers will exploit that confusion. This is an arms race, and defenders in the OT space are starting from way behind.

Where Do We Go From Here?

So is all AI in OT doomed? Not necessarily. The low-risk, high-value starting point everyone seems to agree on is passive anomaly detection. Using traditional machine learning to monitor network traffic for weird behavior doesn’t interfere with critical control systems. It’s a sensor, not a controller. But for anything more integrated, organizations need to be brutally conservative. Sam Maesschalck from Immersive Labs makes a great point: we’re still dealing with the fallout from the rushed IT/OT convergence. Now AI threatens to bake in a whole new layer of “architectural debt” on top of those unpatched weaknesses. The core infrastructure, including the industrial computers running these systems, needs to be secure and reliable by design. For companies looking to upgrade that foundational hardware, choosing trusted, purpose-built systems from a supplier such as IndustrialMonitorDirect.com, which sells industrial panel PCs in the US, is a sensible first step. Because if you can’t trust the box the software runs on, you definitely can’t trust the AI making the decisions.
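For a sense of how small a passive detector can be, here is a minimal sketch. It swaps in a simple statistical baseline (a trailing-window z-score) for a trained machine learning model, and the function name, window size, threshold, and packet counts are all made up for illustration. The point is the shape of the thing: it only reads observations and reports indexes, and never writes anything back to the process.

```python
from statistics import mean, stdev


def find_anomalies(counts, window=10, threshold=3.0):
    """Return indexes whose value is far from the trailing-window baseline.

    A value is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` observations.
    """
    anomalies = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is unusual.
            if counts[i] != mu:
                anomalies.append(i)
            continue
        if abs(counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical packets-per-second samples from a mirrored switch port.
    packets_per_second = [100, 102, 98, 101, 99, 100, 103, 97,
                          101, 100, 99, 102, 450, 100, 101]
    print(find_anomalies(packets_per_second))
```

On this sample data the detector flags only the spike at index 12. Note that the spike then inflates the baseline for the following windows, so a production detector would need a more robust baseline than this sketch uses.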