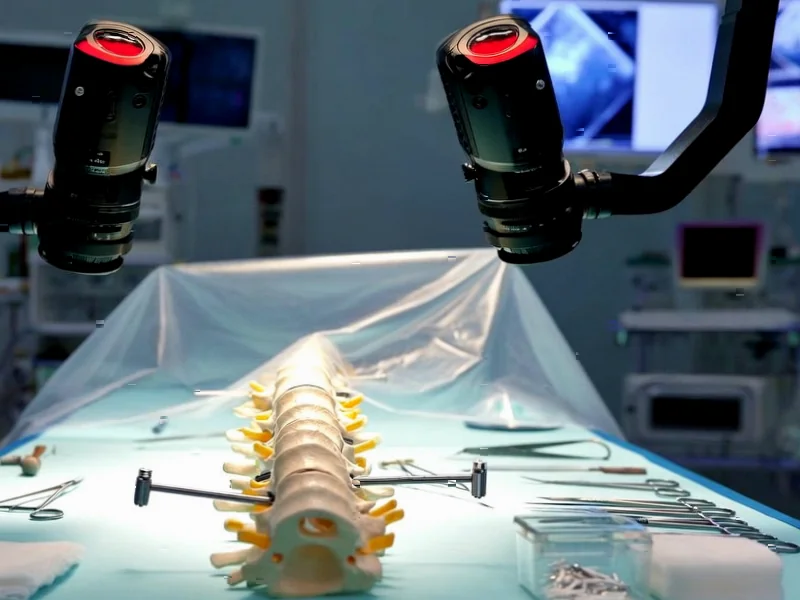

According to Nature, researchers have developed a transformer-based AI system for automatic pedicle screw planning in spinal fusion surgery using RGB-D imaging. The study used two datasets: a clinical CT dataset of 315 segmented lumbar vertebra meshes from 63 healthy participants, and the SpineDepth dataset, which comprises recordings of ex-vivo lumbar spinal fusion procedures on ten cadaveric specimens captured with ZED Mini stereo cameras. The system uses PointNet++ for landmark detection and was evaluated on 14,400 reconstructions from nine of the ten cadaveric specimens, with screw safety assessed using the Gertzbein-Robbins classification. The results indicate that the AI can generate anatomically valid screw trajectories while maintaining safety margins against cortical breaches, a concrete step toward automated surgical planning.
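PointNet++ builds its hierarchical features by first selecting well-spread centroid points with farthest point sampling (FPS). The sketch below shows that sampling step alone in plain Python. It is a generic illustration of FPS, not code from the study, and the function name and `start` parameter are hypothetical.

```python
import math

def farthest_point_sampling(points, k, start=0):
    """Return k indices into `points` (a list of (x, y, z) tuples).

    Starting from `start`, each step picks the point whose distance to its
    nearest already-chosen point is largest, spreading picks over the cloud.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    chosen = [start]
    # nearest[i] = distance from point i to the closest chosen point so far
    nearest = [math.dist(p, points[start]) for p in points]
    while len(chosen) < k:
        idx = max(range(len(points)), key=nearest.__getitem__)
        chosen.append(idx)
        # A new pick can only shrink each point's nearest-chosen distance.
        for i, p in enumerate(points):
            nearest[i] = min(nearest[i], math.dist(p, points[idx]))
    return chosen
```

On `[(0, 0, 0), (1, 0, 0), (2, 0, 0), (10, 0, 0)]` with `k=3`, this picks indices `[0, 3, 2]`: the distant point first, then the remaining point farthest from both picks.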



The Surgical Planning Revolution

Traditional spinal surgery planning relies heavily on surgeon expertise and preoperative CT scans, which provide excellent bone detail but do not reflect the actual surgical field. RGB-D imaging, which captures both color and per-pixel depth, moves planning toward real-time intraoperative use. What makes this research compelling is how it bridges the gap between preoperative planning and actual surgical conditions. Surgeons typically translate 2D CT slices into 3D surgical actions in their heads, a process prone to error and variability. This AI system automates that translation while accounting for the limited bone surface visible in a real surgical exposure.
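To make the RGB-D idea concrete: a depth camera reports a distance for each pixel, and a pinhole camera model turns each valid pixel into a 3D point, yielding the kind of partial point cloud of exposed bone this system works with. This is a minimal sketch, assuming an undistorted image and hypothetical intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point). It does not use the ZED SDK or any vendor API.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into camera-frame 3D points (pinhole model).

    depth: 2D list indexed as depth[v][u] (row v, column u), values in metres,
    with 0 (or negative) meaning "no depth reading" for that pixel.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # skip pixels without a valid depth measurement
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Pixels without a valid depth reading are simply dropped, which is one reason the resulting cloud is partial and why shape completion is needed downstream.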


Technical Breakthroughs and Limitations

The researchers’ use of a transformer architecture to complete vertebral shapes from partial point clouds addresses a fundamental challenge in computer-assisted surgery: incomplete data. During surgery, only the exposed bone surfaces are visible, yet the full vertebral anatomy must be inferred. The system also detects landmarks as regions rather than as discrete points, which better matches surgical reality: anatomical landmarks are not perfect points but regions with natural variability. However, the 80% safety margin applied to the screw radius, while conservative, raises the question of whether it could lead to undersized screws in some cases. Training on simulated RGB-D-like point clouds also introduces potential generalization problems when the system faces unexpected surgical scenarios or pathological anatomy.
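The article does not describe how a predicted landmark region is turned into a usable point for trajectory planning. One simple option, assumed here purely for illustration and not taken from the study, is a score-weighted centroid of the points assigned to the region. The function name, the per-point `scores`, and the `threshold` are all hypothetical.

```python
def landmark_from_region(points, scores, threshold=0.5):
    """Collapse a predicted landmark region into one representative point.

    points: list of (x, y, z); scores: per-point region membership in [0, 1].
    Points scoring below `threshold` are ignored; the rest are averaged,
    weighted by their scores.
    """
    selected = [(p, s) for p, s in zip(points, scores) if s >= threshold]
    if not selected:
        return None  # no point was confidently assigned to the region
    total = sum(s for _, s in selected)
    return tuple(sum(p[i] * s for p, s in selected) / total for i in range(3))
```

For example, points at x = 0 and x = 2 with score 1.0 and an outlier at x = 100 with score 0.1 yield `(1.0, 0.0, 0.0)`: the low-confidence outlier is excluded rather than dragging the landmark away.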

Clinical Implementation Challenges

While the technical results are impressive, real-world implementation faces several hurdles. The summary does not specify the optimization time per screw, and any delay could disrupt surgical workflow. Surgical navigation systems require near-instantaneous feedback, and even a 30-second delay per screw would add up quickly in complex multi-level fusions. The exclusion of one specimen due to “atypical surgical exposure” also highlights a critical limitation: surgical reality often involves atypical conditions. The system’s performance in cases with severe degeneration, previous surgery, or anatomical anomalies remains unproven. Furthermore, the legal and regulatory pathway for AI-driven surgical planning is largely uncharted and will require extensive validation across diverse patient populations and surgical centers.
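As a rough illustration of the 30-second figure, assume a hypothetical four-level lumbar fusion that instruments five vertebrae with two pedicle screws each:

$$
5 \text{ vertebrae} \times 2 \,\tfrac{\text{screws}}{\text{vertebra}} \times 30\,\tfrac{\text{s}}{\text{screw}} = 300\,\text{s} = 5\,\text{min}
$$

Under these assumptions, planning alone would add five minutes to the procedure.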

Future Directions and Market Impact

This technology is part of a broader trend toward AI-enhanced surgical intervention. The same principles could extend to other orthopedic procedures involving screw placement, from fracture fixation to joint reconstruction. Integration with robotic surgical systems is a natural next step: the AI would plan the trajectories and a surgical robot would execute them with sub-millimeter precision. However, the most immediate impact may be in surgical education and planning rather than direct intraoperative use. Surgeons could use such systems for preoperative simulation and training, gradually building trust in the technology before allowing it to influence live surgical decisions. The mesh processing and optimization techniques demonstrated here could become standard in next-generation surgical planning software within three to five years.

Safety and Validation Requirements

The cortical breach assessment using the Gertzbein-Robbins classification reflects established clinical safety standards, but the transition from laboratory validation to patient application requires far more rigorous testing. Future studies will need to demonstrate performance across different surgical approaches, patient positions, and lighting conditions, all of which affect RGB-D imaging quality. The system’s sensitivity to soft tissue obstruction, bleeding, or interference from surgical instruments remains unknown. Like other safety-critical systems, surgical AI must demonstrate fail-safe operation under diverse conditions. The most promising aspect may be the system’s ability to provide quantitative safety margins that surgeons can otherwise only estimate visually, potentially reducing neurological complications from misplaced screws.
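The Gertzbein-Robbins classification grades each screw by how far it breaches the pedicle cortex, in 2 mm steps. The sketch below encodes the commonly cited thresholds. The article does not state how the study measured breach distance or which grades it counted as safe, so treating A and B as acceptable is an assumption here (a common clinical convention), and exact boundary handling varies between sources.

```python
def gertzbein_robbins_grade(breach_mm):
    """Map one screw's cortical breach distance (mm) to a Gertzbein-Robbins grade.

    A: no breach, B: < 2 mm, C: 2 to < 4 mm, D: 4 to < 6 mm, E: >= 6 mm.
    """
    if breach_mm < 0:
        raise ValueError("breach distance cannot be negative")
    if breach_mm == 0:
        return "A"
    if breach_mm < 2:
        return "B"
    if breach_mm < 4:
        return "C"
    if breach_mm < 6:
        return "D"
    return "E"

def clinically_acceptable(grade):
    # Assumption: grades A and B are treated as clinically acceptable placements.
    return grade in ("A", "B")
```

A planner could run this check on every candidate trajectory and reject any screw whose predicted breach falls outside the acceptable grades.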