According to TechSpot, AMD CEO Lisa Su has confirmed the company’s 2026 launch plans for its next-generation Epyc Venice processors and Instinct MI400 accelerators. The Venice CPUs will feature AMD’s Zen 6 architecture built on TSMC’s 2nm process, scaling up to 256 cores and 512 threads with projected performance gains exceeding 70%. Meanwhile, the MI400 AI accelerators will pack 432GB of high-bandwidth memory delivering 19.6TB/s of memory bandwidth for large-scale AI training. Both products are already in testing with major cloud providers, with Su calling customer engagement the strongest AMD has ever seen. The dual launch represents one of AMD’s most significant product shifts since Epyc Rome and MI100 debuted.

The 2nm leap forward

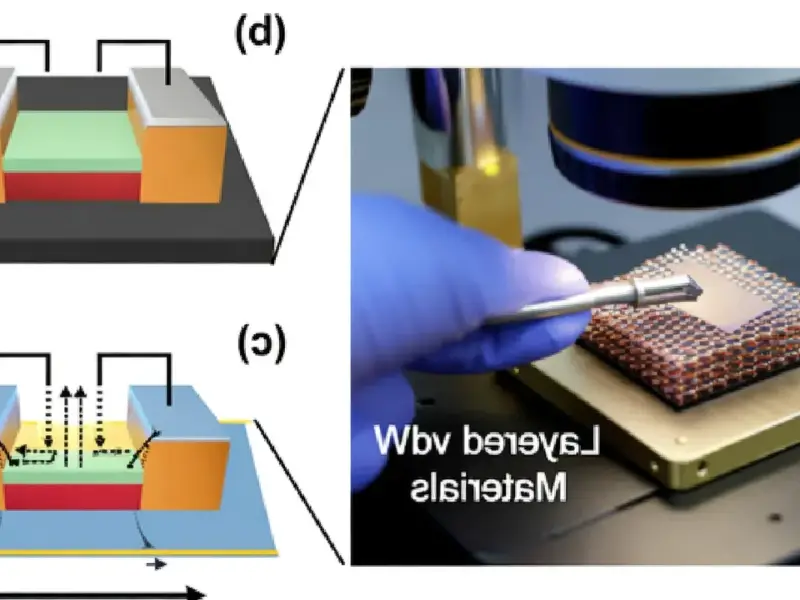

Here’s the thing about moving to 2nm: it’s not just about smaller transistors. Venice marks AMD’s first use of TSMC’s 2nm process, the foundry’s most advanced node, and the implications are huge. We’re talking about substantial improvements in performance per watt, which means data centers can either get more computing power for the same electricity bill or hold performance steady while cutting power costs. And with prototypes already running in AMD labs and showing strong gains in compute density and memory throughput, this isn’t just theoretical anymore.
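To make that trade-off concrete, here is a minimal Python sketch. The 25% performance-per-watt gain, the 1,000 kW baseline, and the throughput units are all hypothetical placeholders; AMD has not published these figures, so treat this as arithmetic under stated assumptions rather than a forecast.

```python
# Hypothetical figures for illustration only; none of them come from AMD.
PERF_PER_WATT_GAIN = 1.25    # assumed: 25% better performance per watt
BASELINE_POWER_KW = 1000.0   # assumed: power draw of an existing deployment
BASELINE_THROUGHPUT = 100.0  # arbitrary units of work per second


def throughput_at_same_power(throughput: float, gain: float) -> float:
    """Option 1: keep the power budget, get proportionally more work done."""
    return throughput * gain


def power_at_same_throughput(power_kw: float, gain: float) -> float:
    """Option 2: keep the workload, draw proportionally less power."""
    return power_kw / gain


more_work = throughput_at_same_power(BASELINE_THROUGHPUT, PERF_PER_WATT_GAIN)
less_power = power_at_same_throughput(BASELINE_POWER_KW, PERF_PER_WATT_GAIN)

print(f"Same power budget: {more_work:.0f} units of work (was {BASELINE_THROUGHPUT:.0f})")
print(f"Same workload: {less_power:.0f} kW (was {BASELINE_POWER_KW:.0f} kW)")
```

Under these assumed numbers, the same power budget buys 25% more work, while the same workload needs 20% less power. The two savings are not symmetric, because the second one divides by the gain instead of multiplying by it.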

But let’s be real: transitioning to a new process node is never easy. There are always yield challenges, thermal management issues, and the sheer complexity of designing for such advanced technology. The fact that AMD already has working silicon suggests the program is on track, which is crucial when you’re competing against Intel’s own aggressive roadmap. For companies deploying industrial computing solutions, this kind of performance-per-watt improvement could be transformative.

Venice CPU ambitions

256 cores. Let that number sink in for a minute. That’s a massive jump even from AMD’s own dense, compute-focused 128-core Bergamo platform. What’s really interesting is how they’re optimizing the interconnects and cache hierarchies, because at these core counts the challenge isn’t just having lots of cores, it’s making sure they can actually communicate efficiently without creating bottlenecks.

AMD is targeting everything from cloud-scale computing to technical simulations, which suggests they’re building a truly general-purpose server CPU that can handle diverse workloads. The memory bandwidth improvements are particularly crucial for memory-intensive applications. Think about scientific computing, financial modeling, or large database operations – these are all areas where memory performance often becomes the limiting factor long before raw compute does.

MI400 AI acceleration

Now let’s talk about the AI side of things. The MI400’s 432GB of high-bandwidth memory and 19.6TB/s bandwidth aren’t just big numbers – they’re specifically targeting the massive models that are becoming standard in AI training. When you’re working with models that have hundreds of billions of parameters, memory capacity and bandwidth become absolutely critical. Without enough memory, you can’t even load the model, let alone train it efficiently.
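A quick back-of-the-envelope calculation shows why those two numbers matter. The sketch below uses the 432GB and 19.6TB/s figures quoted above; the bytes-per-parameter values are assumptions (2 bytes for bf16 weights alone, and roughly 16 bytes per parameter for mixed-precision training with an Adam-style optimizer, ignoring activations), not AMD specifications.

```python
GB = 10**9
TB = 10**12

HBM_CAPACITY_BYTES = 432 * GB           # capacity quoted in the article
HBM_BANDWIDTH_BYTES_PER_S = 19.6 * TB   # bandwidth quoted in the article

# Assumptions, not AMD figures:
BYTES_PER_PARAM_WEIGHTS_ONLY = 2   # bf16 weights, nothing else resident
BYTES_PER_PARAM_TRAINING = 16      # bf16 weights + bf16 gradients + fp32 master
                                   # weights + two fp32 Adam moments (2+2+4+4+4)


def max_params(capacity_bytes: int, bytes_per_param: int) -> int:
    """Largest parameter count whose state fits in the given memory."""
    return capacity_bytes // bytes_per_param


def full_sweep_ms(capacity_bytes: int, bandwidth_bytes_per_s: float) -> float:
    """Time to read every byte of memory once, in milliseconds."""
    return capacity_bytes / bandwidth_bytes_per_s * 1000


weights_only_params = max_params(HBM_CAPACITY_BYTES, BYTES_PER_PARAM_WEIGHTS_ONLY)
training_params = max_params(HBM_CAPACITY_BYTES, BYTES_PER_PARAM_TRAINING)
sweep_ms = full_sweep_ms(HBM_CAPACITY_BYTES, HBM_BANDWIDTH_BYTES_PER_S)

print(f"Weights only (bf16): up to {weights_only_params / 1e9:.0f}B parameters")
print(f"Training state (assumed 16 B/param): up to {training_params / 1e9:.0f}B parameters")
print(f"One full pass over memory: about {sweep_ms:.1f} ms")
```

Under these assumptions, a single accelerator could hold the weights of a model with roughly 216 billion parameters, but the full training state of only about 27 billion. That gap is why models with hundreds of billions of parameters end up spread across many accelerators.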

The improved interconnect architecture and integrated networking are just as important as the raw specs. Because here’s the reality – nobody runs just one AI accelerator. You’re deploying clusters of them, and if the communication between accelerators becomes a bottleneck, all that compute power goes to waste. AMD’s Helios platform, which will integrate MI400 accelerators, seems designed to address exactly this challenge by treating the entire rack as a unified system rather than just a collection of individual components.

Competitive landscape shift

So what does this mean for the broader semiconductor market? Basically, AMD is coming after both Intel in server CPUs and Nvidia in AI accelerators simultaneously. That’s an ambitious play, but the 2026 timing suggests they’re not just reacting to current market conditions – they’re planning several moves ahead.

The really interesting part is how these products might work together. An Epyc Venice server packed with MI400 accelerators could offer a compelling alternative to current solutions from either Intel or Nvidia. And with cloud providers already doing early testing, it’s clear there’s genuine interest in having more competition in these high-margin markets. The question is whether AMD can execute on this roadmap as smoothly as they’ve managed their recent product launches – because if they can, 2026 could be a very interesting year for data center infrastructure.