According to Embedded Computing Design, Edge Impulse, now a Qualcomm Technologies company, is showcasing its latest edge AI work at embedded world North America 2025 in Booth 5061. Following Qualcomm’s acquisition of Arduino, Edge Impulse now supports Arduino’s new UNO Q board, which pairs a Qualcomm Dragonwing QRB2210 microprocessor running Linux with an STM32U585 microcontroller for real-time responsiveness. The company will demonstrate vision language models on Qualcomm Dragonwing processors and host an interactive slot car racing demo in which Edge Impulse’s FOMO object detection algorithm runs on a Rubik Pi while Arduino Nicla Sense ME boards handle crash detection. Edge Impulse is also collaborating with Foundries.io on a two-hour workshop that teaches embedded developers how to build AI-powered embedded Linux devices with secure lifecycle management. Taken together, the showcase suggests that edge AI tooling is maturing to the point where mainstream embedded developers can use it.

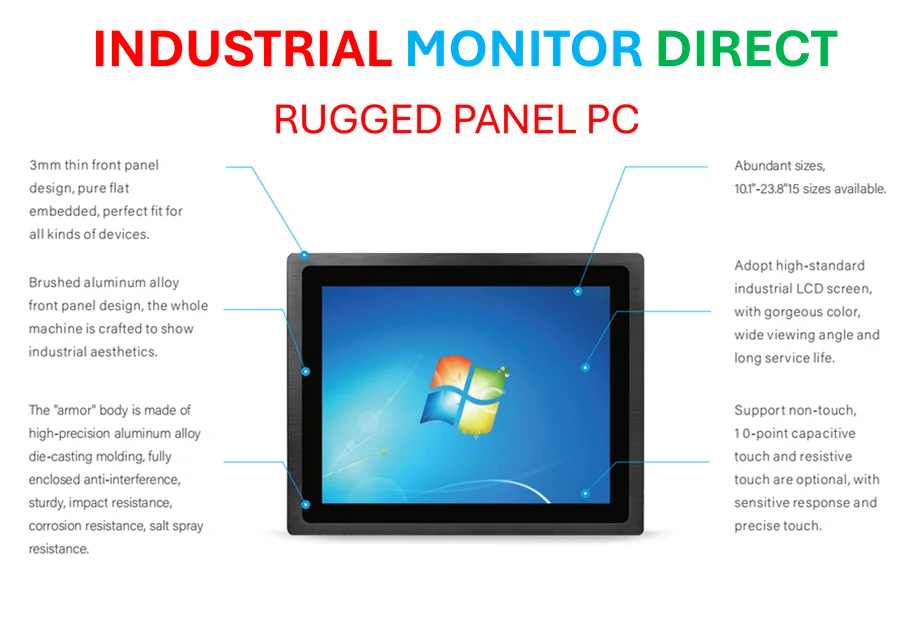

The Embedded AI Consolidation Play

Qualcomm’s positioning here is a vertical integration strategy of a kind that is becoming increasingly common in the semiconductor industry. By acquiring both Arduino and Edge Impulse, Qualcomm isn’t just selling chips; it is building an ecosystem in which its hardware becomes the default choice for embedded AI development. The UNO Q board is a particularly clever move, because it connects the large Arduino educational and maker community to industrial-grade capabilities. The approach mirrors what NVIDIA has done with its Jetson platform, but it targets the microcontroller-class end of the market, where power efficiency and real-time performance are paramount.

Vision Language Models at the Edge: What’s Really Changing

The demonstration of vision language models on Dragonwing processors is a technical milestone that the source only hints at. VLMs typically require substantial computational resources, which has traditionally kept them in the cloud. Running them at the edge enables applications where latency, bandwidth, or privacy concerns make cloud processing impractical: industrial quality control systems that can interpret complex visual scenes, for example, or retail environments that can analyze customer behavior without sending sensitive video off-site. The real challenge, however, isn’t just getting these models to run. It is maintaining accuracy within the severe power and thermal constraints of embedded systems. That Edge Impulse is demonstrating this at all suggests, though the source does not confirm it, that the company has made progress on model optimization techniques that go beyond standard quantization and pruning.
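For readers unfamiliar with the two baseline techniques named above, here is a minimal Python sketch of symmetric int8 quantization and magnitude pruning applied to a plain list of weights. It is an illustration only: the function names are hypothetical, it is not Edge Impulse's or Qualcomm's implementation, and production toolchains apply these ideas per layer or per channel with calibration data.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127].

    Returns the integer values and the scale needed to map them back.
    (Hypothetical helper for illustration, not a library API.)
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:k])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 0.9]
    q, scale = quantize_int8(weights)
    print("int8 values:", q)
    print("round trip: ", dequantize(q, scale))
    print("50% pruned: ", prune_by_magnitude(weights, 0.5))
```

Quantization shrinks each weight from 32 bits to 8 at the cost of a rounding error of at most half the scale, while pruning trades small weights for sparsity. Both reduce memory and compute, which is why they are the usual starting point before more aggressive optimization.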

The Silent Revolution in Developer Experience

What’s most transformative here isn’t any single technology but the improvement in developer accessibility. The slot car racing demo, while playful, makes a serious point: a multi-device AI system, with object detection on one board and crash detection on others, would likely have required months of specialized expertise in the past and can now plausibly be prototyped in days. Edge Impulse’s platform abstracts away much of the complexity of model optimization, deployment, and device management. The Foundries.io workshop reinforces the trend, since it presents a toolchain in which security, CI/CD, and OTA updates are first-class concerns rather than afterthoughts. This marks a shift from AI as a research problem to AI as an engineering discipline that mainstream embedded developers can actually practice.

Broader Market Implications and Challenges

The timing of these developments coincides with growing enterprise interest in edge AI solutions that don’t depend on continuous cloud connectivity. Industries from manufacturing to agriculture are recognizing that many AI applications need to function reliably in environments with poor or nonexistent internet access. However, several challenges remain unaddressed. The fragmentation of embedded hardware means that optimized models often need significant rework when moving between platforms. Security concerns around AI model protection and data privacy at the edge still need comprehensive solutions. And perhaps most importantly, the skills gap between traditional embedded developers and AI specialists remains substantial, despite tools like Edge Impulse making the technology more accessible.

Where This Technology is Headed

Looking beyond the immediate demonstrations, the trajectory here suggests several emerging trends. We’re likely to see increased specialization in edge AI processors, with different architectures optimized for specific types of models rather than general-purpose computation. The line between microcontrollers and application processors will continue to blur, creating hybrid devices that can handle both real-time control and complex AI inference. Most importantly, the success of platforms like Edge Impulse with Arduino integration suggests that the future of embedded AI development will be dominated by comprehensive ecosystems rather than individual components. Developers will increasingly choose platforms based on the completeness of their tooling and the size of their partner networks, not just raw hardware specifications.