According to Manufacturing AUTOMATION, robotics vision provider Inbolt launched its next-generation bin picking solution on December 16, 2025, in Detroit. The system uses a 3D camera mounted directly on the robot arm, driven by proprietary AI, to handle unstructured environments. It’s designed to identify, grasp, and place parts even when they’re randomly positioned or partially hidden. The company claims a pick time of under one second per part and success rates of up to 95% in live production. The solution is already running in more than five factories, and manufacturers can request a demo through Inbolt’s contact page.

Why This Matters Now

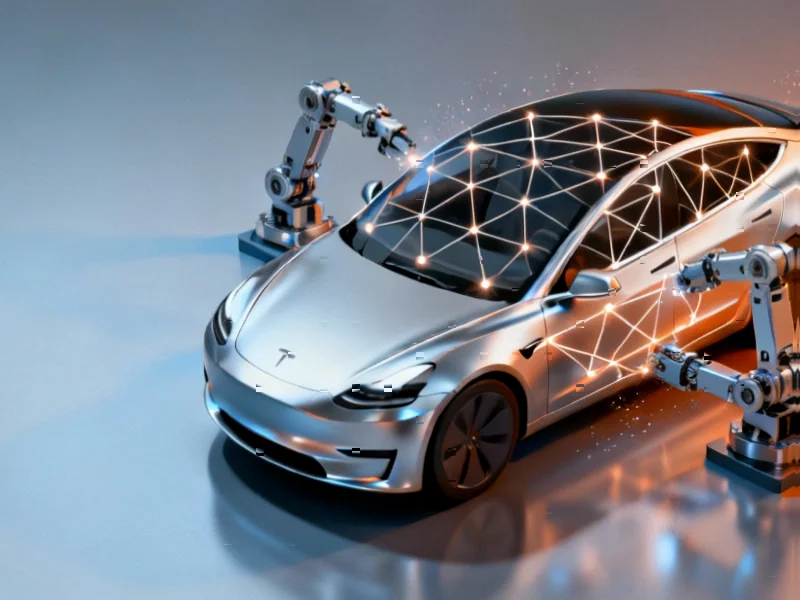

Look, bin picking has been both a holy grail and a massive bottleneck in automation for years. Programming a robot to handle a neat, orderly tray of identical parts is one thing. But a jumble of random components tossed into a bin? That’s a nightmare of perception and physics. Traditional systems need perfect lighting, fixed bins, and often perfect part presentation. Inbolt’s approach, with the camera on the arm itself, is a big shift. It’s basically giving the robot a moving eyeball, letting it look from different angles the way a human peers into a box. The sub-second pick time is the real kicker. That’s not just fast; it’s fast enough to start replacing the tedious manual stations on assembly lines that everyone has wanted to automate but couldn’t.

The Human-Like Adaptability Trick

Here’s the thing they’re really selling: “human-like adaptability.” The press release mentions a “closed-loop process” and “seeing in hand and adjusting.” That’s huge. Most robotic grasps are dumb: the arm moves to a pre-calculated point, closes the gripper, and hopes for the best. If the part slips or isn’t oriented correctly, the whole operation fails. Inbolt claims its AI watches the part *during* the grasp and makes micro-adjustments in real time. It’s not just about finding a part; it’s about recovering from a bad grab. That greatly expands the range of usable scenarios. Think of all the bins in a factory where parts are oily, nested together, or oddly shaped. This is the kind of flexibility that could finally make “lights-out” manufacturing for complex assembly a more realistic goal.
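The press release doesn’t say how Inbolt’s closed loop actually works, so what follows is only a generic illustration of the idea, not the company’s method. It’s a minimal Python sketch of a proportional correction loop: observe the part’s offset in the gripper, stop if it’s within tolerance, otherwise correct against the measured error and look again. Everything in it (`Offset`, `SimulatedGripper`, `closed_loop_adjust`, the 0.5 tolerance, the 0.7 gain) is hypothetical, and the “camera” here simply reads the simulated true error.

```python
from dataclasses import dataclass


@dataclass
class Offset:
    """Hypothetical in-hand error of the part vs. the target grasp (mm, mm, degrees)."""
    dx: float
    dy: float
    dtheta: float

    def within(self, tol: float) -> bool:
        # The part counts as "settled" when every error component is inside the tolerance.
        return max(abs(self.dx), abs(self.dy), abs(self.dtheta)) <= tol


class SimulatedGripper:
    """Toy stand-in for a camera-in-hand cell (hypothetical, not a real robot API)."""

    def __init__(self, offset: Offset, gain: float = 0.7):
        self.offset = offset
        self.gain = gain  # fraction of the measured error removed per correction

    def observe(self) -> Offset:
        # A real system would estimate this from camera images; here we copy the true value.
        return Offset(self.offset.dx, self.offset.dy, self.offset.dtheta)

    def correct(self, measured: Offset) -> None:
        # Proportional correction: move against the measured error, scaled by the gain.
        self.offset.dx -= self.gain * measured.dx
        self.offset.dy -= self.gain * measured.dy
        self.offset.dtheta -= self.gain * measured.dtheta


def closed_loop_adjust(cell: SimulatedGripper, tol: float = 0.5,
                       max_iters: int = 10) -> tuple[bool, int]:
    """Observe, check tolerance, correct; repeat. Returns (settled, corrections_used)."""
    for step in range(max_iters):
        measured = cell.observe()
        if measured.within(tol):
            return True, step
        cell.correct(measured)
    # Out of iterations: report whether the last correction happened to be enough.
    return cell.observe().within(tol), max_iters


if __name__ == "__main__":
    cell = SimulatedGripper(Offset(4.0, -3.0, 10.0))
    settled, corrections = closed_loop_adjust(cell)
    print(f"settled={settled} after {corrections} corrections")
```

Starting from an error of (4, -3, 10), the loop settles after three corrections, since each pass leaves 30% of the previous error. Setting `gain=0.0` mimics an open-loop grasp that never corrects itself, and the loop gives up after `max_iters`.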

Is This The Real Deal?

So, should we be skeptical? A little, always. “Up to 95%” success is a classic marketing phrase: it describes the best case. The real test is the sustained success rate across thousands of picks, including on the worst day with the most battered parts. But the fact that it’s already deployed in more than five factories is a strong signal. It’s not a lab demo. Inbolt is putting it in front of real production managers who have zero tolerance for downtime. The move to on-arm sensing feels inevitable: it reduces calibration headaches and makes the cell layout simpler and more flexible. I think we’re seeing a clear trajectory here. AI is moving from the data center onto the factory floor, and now literally onto the robot arm itself. The next step? Probably systems that don’t just pick a part but visually inspect it for defects *while* moving it. That’s the real endgame: combining multiple value-add steps into one fluid, intelligent motion.
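To see why the gap between a headline rate and a sustained rate matters, here’s a back-of-the-envelope throughput calculation in Python. It assumes (my assumption, not Inbolt’s) that every attempt costs one full cycle whether or not it succeeds, and that missed picks are simply retried, so the expected number of attempts per good part is 1 divided by the success rate. `good_parts_per_hour` is a hypothetical helper, and the 80% figure is an arbitrary comparison point, not a reported result.

```python
def good_parts_per_hour(cycle_time_s: float, success_rate: float) -> float:
    """Expected successfully placed parts per hour (hypothetical helper).

    Assumes every attempt, hit or miss, takes one full cycle and failed picks
    are retried, so attempts per good part follow a geometric distribution
    with mean 1 / success_rate.
    """
    if cycle_time_s <= 0:
        raise ValueError("cycle_time_s must be positive")
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    attempts_per_hour = 3600.0 / cycle_time_s
    # Good parts per hour = attempts per hour / attempts per good part.
    return attempts_per_hour * success_rate


if __name__ == "__main__":
    # The quoted figures taken at face value: 1 s per attempt, best-case 95% success.
    headline = good_parts_per_hour(1.0, 0.95)
    # Hypothetical sustained average, for comparison only (not a reported figure).
    sustained = good_parts_per_hour(1.0, 0.80)
    print(f"headline: {headline:.0f} parts/h, hypothetical sustained: {sustained:.0f} parts/h")
```

Taking the quoted figures at face value gives roughly 3,420 good parts per hour, while the hypothetical 80% case drops to 2,880. Because output scales linearly with the success rate, every percentage point the sustained average falls below the “up to” figure costs about 36 parts per hour at a one-second cycle.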