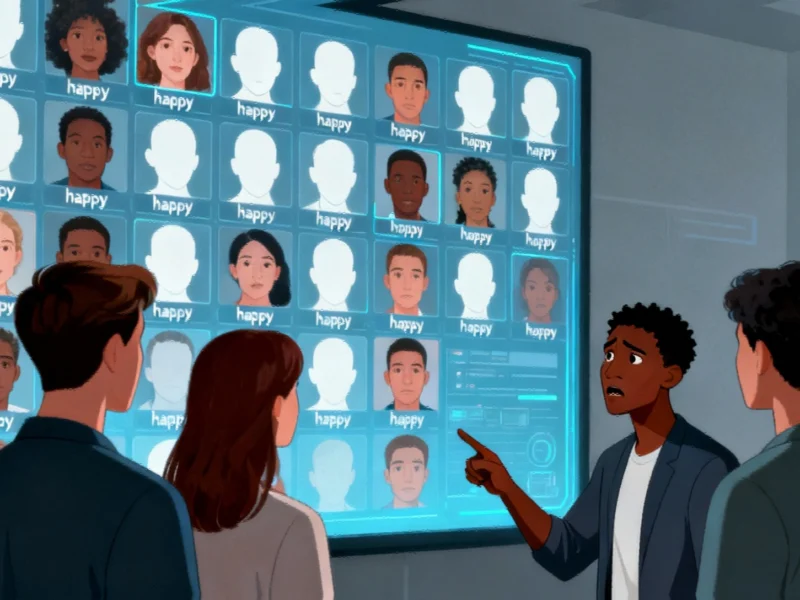

The Unseen Prejudice in Machine Learning

When artificial intelligence systems show racial bias in facial recognition and emotion detection, the root cause often lies in the training data itself. A study published in Media Psychology finds that most users do not notice these biases in training datasets unless they belong to the negatively portrayed group. The research highlights challenges for both AI development and public understanding of algorithmic systems.

The study, conducted by researchers who have been investigating this issue for five years, demonstrates how unrepresentative training data creates AI systems that “work for everyone” in theory but favor majority groups in practice. Senior author S. Shyam Sundar, Evan Pugh University Professor and director of the Center for Socially Responsible Artificial Intelligence at Penn State, noted that “AI seems to have learned that race is an important criterion for determining whether a face is happy or sad—even though we don’t mean for it to learn that.”

Experimental Design Reveals Human Limitations

Researchers created 12 versions of a prototype AI system designed to detect facial expressions and tested them with 769 participants across three experiments. The first experiment presented training data with biased representation: happy faces were predominantly white and sad faces were predominantly Black. The second presented inadequate representation, in which participants saw only white subjects in both emotion categories.

Lead author Cheng “Chris” Chen, assistant professor of emerging media and technology at Oregon State University, explained that “in one of the experiment scenarios—which featured racially biased AI performance—the system failed to accurately classify the facial expression of the images from minority groups. That is what we mean by biased performance in an AI system where the system favors the dominant group in its classification.”
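The kind of biased performance Chen describes, where a classifier is less accurate for one group than another, can be checked by breaking accuracy down per group. The following is a minimal sketch, not the researchers' method: the function name `accuracy_by_group`, the group labels "A" and "B", and the toy predictions are all hypothetical and invented for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Invented toy data (not from the study): the classifier is right for
# every group "A" face but mislabels the happy faces in group "B".
toy_predictions = [
    ("A", "happy", "happy"),
    ("A", "sad", "sad"),
    ("A", "happy", "happy"),
    ("A", "sad", "sad"),
    ("B", "happy", "sad"),
    ("B", "sad", "sad"),
    ("B", "happy", "sad"),
    ("B", "sad", "sad"),
]

per_group = accuracy_by_group(toy_predictions)
# Difference between the best-served and worst-served group.
accuracy_gap = max(per_group.values()) - min(per_group.values())
print(per_group, accuracy_gap)
```

A single overall accuracy figure (75 percent for this toy data) would hide the gap; reporting the per-group numbers side by side is what makes it visible.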

Who Notices Bias—And When

The findings revealed striking patterns in bias detection. Across all three scenarios, most participants indicated they didn’t notice any bias. However, Black participants were significantly more likely to identify racial bias, particularly when training data over-represented their own group for negative emotions. In the final experiment, which included equal numbers of Black and white participants, this pattern became even more pronounced.

“We were surprised that people failed to recognize that race and emotion were confounded, that one race was more likely than others to represent a given emotion in the training data—even when it was staring them in the face,” Sundar remarked. “For me, that’s the most important discovery of the study.”

The Psychology of AI Trust

The research reveals more about human psychology than technology, according to the researchers. Sundar noted that people often “trust AI to be neutral, even when it isn’t.” This misplaced trust makes algorithmic bias harder to address, particularly as major technology companies expand AI infrastructure and deployment.

Chen emphasized that “bias in performance is very, very persuasive. When people see racially biased performance by an AI system, they ignore the training data characteristics and form their perceptions based on the biased outcome.” This tendency to judge AI systems by their outputs rather than their inputs creates a dangerous feedback loop where biased systems continue operating without challenge.

Broader Implications for AI Development

The study’s findings have implications for how AI systems are developed and deployed across sectors. As AI-powered robotics platforms are adopted in chemical processing and other industrial applications, ensuring that these systems do not perpetuate human biases becomes increasingly important, and diversity and representation need to be considered from the earliest development stages.

The challenges extend beyond facial recognition to other AI applications. For instance, AI models of cell-drug interactions could exhibit similar biases if their training data is not properly vetted. Even in climate modeling, data selection and processing choices could introduce subtle biases that most users would not detect.

Moving Toward Solutions

The researchers plan to continue studying how people perceive and understand algorithmic bias, with future work focusing on developing better methods to communicate AI bias to users, developers, and policymakers. Improving media and AI literacy represents a crucial step toward creating more equitable systems.

As Sundar emphasized, the goal is to create AI systems that produce “diverse and representative outcomes for all groups, not just one majority group.” This requires understanding what AI learns from unanticipated correlations in training data and developing mechanisms to identify and correct these patterns before systems are deployed.
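One simple way to look for the kind of unanticipated correlation described here is to tabulate, for each emotion label, what share of the training samples comes from each group. The sketch below is illustrative only and is not taken from the study: the function names, the `tolerance` threshold, the even-split baseline, and the toy data are all assumptions. The list of expected groups is passed in explicitly so that a group missing from the data entirely still counts as a deviation.

```python
from collections import Counter


def group_share_by_label(samples):
    """For each label, return the fraction of its samples that come from each group."""
    pair_counts = Counter(samples)  # (group, label) -> count
    label_totals = Counter(label for _, label in samples)
    shares = {}
    for (group, label), count in pair_counts.items():
        shares.setdefault(label, {})[group] = count / label_totals[label]
    return shares


def flag_skewed_labels(samples, groups, tolerance=0.2):
    """Return labels where some group's share differs from an even split by more than tolerance.

    The even-split baseline and the default tolerance are assumptions
    made for this sketch; a real audit would choose both deliberately.
    """
    shares = group_share_by_label(samples)
    even_share = 1 / len(groups)
    flagged = []
    for label, by_group in shares.items():
        if any(abs(by_group.get(g, 0.0) - even_share) > tolerance for g in groups):
            flagged.append(label)
    return sorted(flagged)


# Invented toy data: group and emotion are confounded.
confounded = (
    [("A", "happy")] * 8 + [("B", "happy")] * 2
    + [("A", "sad")] * 2 + [("B", "sad")] * 8
)
# Invented toy data: both groups appear equally under each label.
balanced = (
    [("A", "happy")] * 5 + [("B", "happy")] * 5
    + [("A", "sad")] * 5 + [("B", "sad")] * 5
)

print(flag_skewed_labels(confounded, groups=["A", "B"]))
print(flag_skewed_labels(balanced, groups=["A", "B"]))
```

A check like this only surfaces a skew in how groups are distributed across labels; deciding whether the skew matters, and how to correct it, still requires human judgment.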

The study is a reminder that as AI becomes more integrated into daily life, from space exploration to industrial automation, making these systems work equitably for all users requires both technical solutions and a better human understanding of how bias operates in machine learning systems.

Based on reporting by Phys.org. This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.