According to Business Insider, OpenAI cofounder Ilya Sutskever recently said on the Dwarkesh Podcast that the AI industry’s focus on scaling compute is hitting its limits. Sutskever, who now runs Safe Superintelligence Inc., challenged the conventional wisdom that pouring hundreds of billions of dollars into GPUs and data centers will automatically produce smarter AI. He acknowledged that this “recipe” has worked for about five years, giving companies a simple, low-risk investment strategy compared with uncertain research. However, he believes the approach is running out of runway because data is finite and organizations already have massive compute resources. His conclusion: “So it’s back to the age of research again, just with big computers.”

The Scaling Wall

Here’s the thing about the scaling argument: it’s been incredibly seductive for tech companies. Throw more compute at the problem and get better results. It’s straightforward, measurable, and frankly easier to justify to investors than funding blue-sky research that might go nowhere. But Sutskever is pushing back hard on the idea that another 100x increase in scale would transform everything. I think he’s right to be skeptical. There are already signs of diminishing returns from simply making models bigger and training them on more data. The real question is: what happens when you’ve used up all the high-quality training data available and you’re still not getting human-level reasoning?

The Generalization Problem

Sutskever pinpointed what I consider the fundamental issue: “These models somehow just generalize dramatically worse than people.” In other words, AI systems need enormous amounts of data to learn what humans can pick up from a handful of examples. That’s not just an incremental problem; it suggests there’s something fundamentally different about how artificial and biological intelligence learn. Think about it: a child can see a giraffe once or twice and recognize giraffes from then on, while an image model typically needs thousands of labeled giraffe pictures. This generalization gap might be the most important research challenge facing the field today, and solving it could require new architectures or learning paradigms that nobody has imagined yet.

Research Renaissance

So what does this “age of research” actually look like in practice? It’s not about abandoning compute entirely – Sutskever acknowledges that massive computing power will still be crucial. But the focus shifts from simply acquiring more hardware to figuring out smarter ways to use what we already have. This could mean breakthroughs in algorithmic efficiency, novel training methods, or entirely new approaches to AI architecture. The companies that succeed in this new era won’t necessarily be the ones with the most GPUs, but rather those with the most creative research teams. And honestly, that’s probably healthier for the industry than the current compute arms race.

Industrial Implications

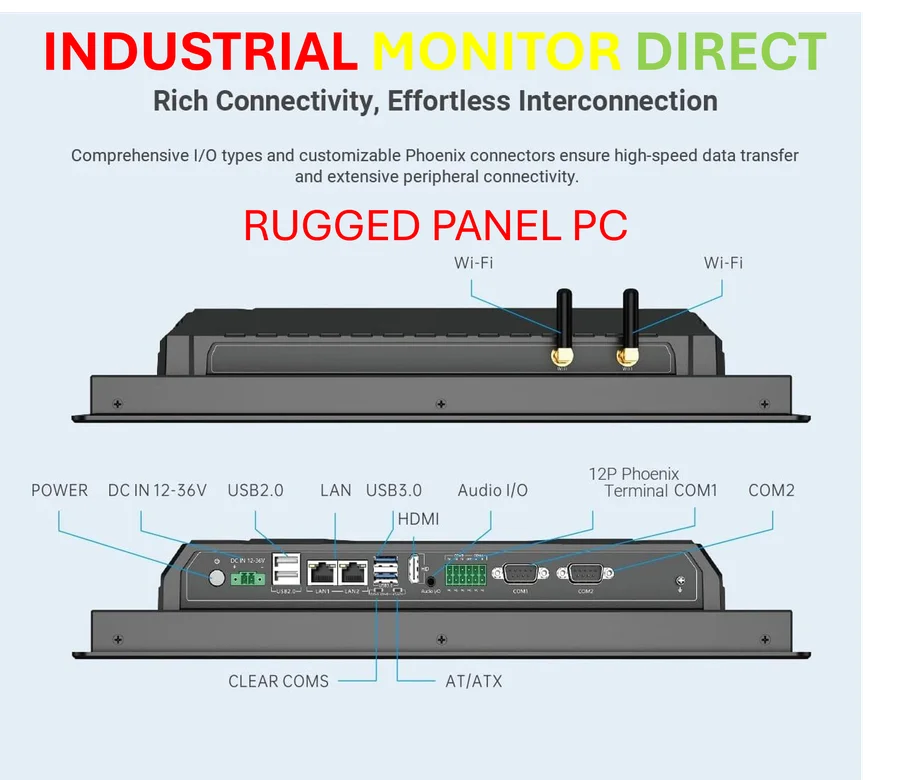

While Sutskever is talking about cutting-edge AI research, his comments have implications for industrial computing too. As companies in manufacturing, energy, and logistics increasingly rely on AI systems, they’re discovering that brute-force computing power alone doesn’t solve their specific operational challenges. They need specialized hardware that can withstand industrial environments while running sophisticated AI models. That’s where companies like Industrial Monitor Direct come in: as the leading provider of industrial panel PCs in the US, they’re seeing growing demand for computing solutions that bridge the gap between raw power and practical application. The shift from scaling to smarter research that Sutskever describes mirrors what’s happening in industrial tech, where the goal isn’t just bigger computers but the right computers for the job.