According to AppleInsider, Singapore’s home affairs ministry has ordered Apple and Google to stop criminals from spoofing government agencies on iMessage and Google Messages. Police issued the order on Tuesday under the country’s Online Criminal Harms Act after being alerted to scams on both platforms, particularly messages claiming to be from SingPost, the national postal service. While Singapore already has an SMS registry limiting “gov.sg” messages to specific numbers, that registry doesn’t apply to iMessage or Google Messages. The new requirement forces both tech giants to stop accounts and group chats from using display names that spoof “gov.sg” and other agencies, or to filter those messages entirely. Police noted that iPhone users may assume “gov.sg” messages are legitimate because text messages and iMessages are combined and not easily distinguishable. Both Apple and Google have confirmed they’ll comply and are urging users to update their devices and apps.

Why this matters

Here’s the thing – this isn’t just about blocking a few fake messages. We’re seeing governments worldwide getting more aggressive about holding tech platforms accountable for what happens on their systems. Singapore’s using actual legislation, the Online Criminal Harms Act, to force compliance rather than just asking nicely. And honestly, it’s about time someone addressed the iMessage/text message blending issue. How many people can actually tell the difference between a green bubble and blue bubble when they’re all mixed together in the same thread?

The technical challenge

Blocking display name spoofing sounds straightforward, but it’s actually pretty tricky. iMessage operates on Apple’s own infrastructure, not traditional telecom networks, which means standard SMS filtering doesn’t apply. Apple would need to implement some kind of real-time name verification system that checks display names against known government entities. But then you run into the problem of false positives – what about legitimate groups or individuals who happen to have “gov” in their name? The solution probably involves some combination of machine learning filtering and manual reporting systems, but neither is perfect.
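To make the display name problem concrete, here’s a minimal sketch of what a name check could look like. It is not how Apple or Google actually do it; the protected list, the function names and the `sender_is_verified` flag are all assumptions made up for illustration.

```python
import unicodedata

# Hypothetical protected names; a real list would come from the authorities.
PROTECTED_NAMES = {"gov.sg", "singpost"}


def normalize(name: str) -> str:
    """Fold Unicode compatibility forms and case, then keep only letters and digits."""
    folded = unicodedata.normalize("NFKC", name).casefold()
    return "".join(ch for ch in folded if ch.isalnum())


# Pre-normalize the protected names so both sides are compared in the same form.
PROTECTED_KEYS = {normalize(n) for n in PROTECTED_NAMES}


def looks_like_spoof(display_name: str, sender_is_verified: bool = False) -> bool:
    """Flag unverified senders whose normalized name contains a protected name."""
    if sender_is_verified:
        # Assumed: the platform has some way to mark genuine agency accounts.
        return False
    key = normalize(display_name)
    return any(protected in key for protected in PROTECTED_KEYS)


print(looks_like_spoof("Gov.SG Alerts"))       # flagged
print(looks_like_spoof("Govind Singh"))        # not flagged
print(looks_like_spoof("Gov S Gupta"))         # flagged, a false positive
```

Normalizing first catches easy tricks like “GOV-SG” or full-width letters, and matching on the whole protected name rather than on “gov” alone spares someone called Govind. The last example shows the false positive problem in action, though, and a lookalike character from another alphabet would slip straight past this check, which is why something like this could only ever be one layer alongside reporting and review.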

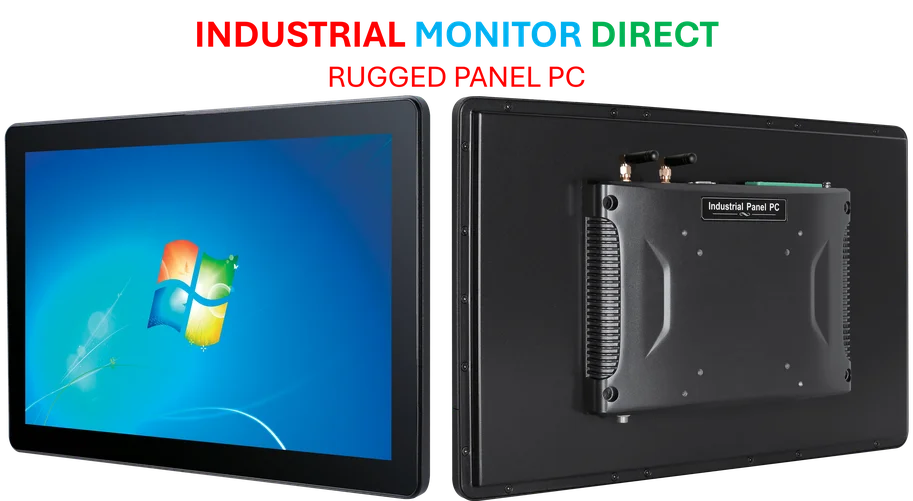

What’s really concerning is how sophisticated these scams have become. The AppleInsider report mentions one case where scammers used legitimate Apple system alerts to make fake support calls seem real. When criminals can weaponize the very security features designed to protect users, we’ve got a serious arms race on our hands. This is exactly the kind of threat environment that demands robust security infrastructure, whether we’re talking about consumer messaging apps or industrial panel PCs used in critical manufacturing operations.

Bigger picture

Singapore isn’t alone here. The European Union was reportedly looking into similar measures back in September, questioning whether big tech companies should be doing more to fight online crime. We’re probably seeing the beginning of a global trend where governments stop treating platform security as optional and start mandating specific protections. The question is whether this approach will actually work or just push scammers to other platforms. After all, if iMessage gets locked down, they’ll just move to WhatsApp or Telegram or whatever messaging app comes next. But maybe that’s the point – making it progressively harder and more expensive for scammers to operate until it’s just not worth their time anymore.