According to Forbes, the open source versus closed source AI debate is creating major divisions in the tech world, with experts like Dinesh Maheshwari, Karl Zhao, Charles Fan and Jose Plehn weighing in on the strategic implications. The discussion reveals that China is currently producing more of the open foundation models, a category that includes Alibaba’s Qwen alongside Meta’s Llama and France’s Mistral, while US frontier labs are opting for closed source approaches. Jose Plehn noted the US AI ecosystem has become “a little bit more closed,” with his company BrightQuery working on a National Secure Data Service. Meanwhile, Maheshwari called DeepSeek only “half open, half closed” despite its open source reputation, highlighting how much definitions of an open AI system vary.

The messy reality of open versus closed

Here’s the thing about the open source AI debate: it’s not nearly as binary as people make it sound. When experts talk about “open source” models, they’re actually referring to everything from fully transparent systems where you get weights, training data, and architecture, to what Maheshwari calls “open weights” models, where you get the trained weights but not necessarily the data or training setup behind them. We’re dealing with a spectrum rather than two clear categories.

And that spectrum matters because it affects everything from national security to business models. Closed systems like GPT-4 give companies control and potentially more profit from B2B customers who want proprietary solutions. But open weights models allow for auditing, customization, and hosting on different infrastructure – which builds trust and enables broader innovation. The problem? We’re seeing this divide play out along national lines, with China pushing more open models while US companies lean closed.

Why trust requires more than just weights

Maheshwari made a crucial point that often gets lost in these discussions: “Open source is about trust.” But trust doesn’t come from just publishing model weights. It requires open sourcing data, model architecture, meta parameters – the whole stack. Without that comprehensive transparency, can we really call something open source? Or are we just getting a limited view that creates the illusion of openness?

Charles Fan’s comments about memory systems highlight another layer to this. Current models only capture public knowledge, but corporate or personal memories remain locked away. His company is open sourcing a memory model specifically because they believe memory should belong to the person who owns it, regardless of which AI model they use. That’s a fundamentally different approach from frontier labs that tie you to specific proprietary systems.

The national security angle

When you hear experts talk about sovereignty and “techno nationalism,” it’s easy to dismiss it as political rhetoric. But there are real concerns here. If countries can’t build and run AI in their own language and cultural context, they become dependent on foreign systems that might not represent their values or interests. Zhao’s work on Greece’s national AI system shows how smaller countries are recognizing this vulnerability.

Yet Plehn’s warning about censorship points to the other side of this coin. Open sourcing data helps prevent historical revisionism and maintains access to knowledge across borders. The challenge is finding that balance between sovereignty and collaboration – between protecting national interests and maintaining global knowledge sharing. Can we have both? Maheshwari seems to think so, arguing that sovereignty doesn’t have to mean splitting efforts or creating a “splinternet” of AI.

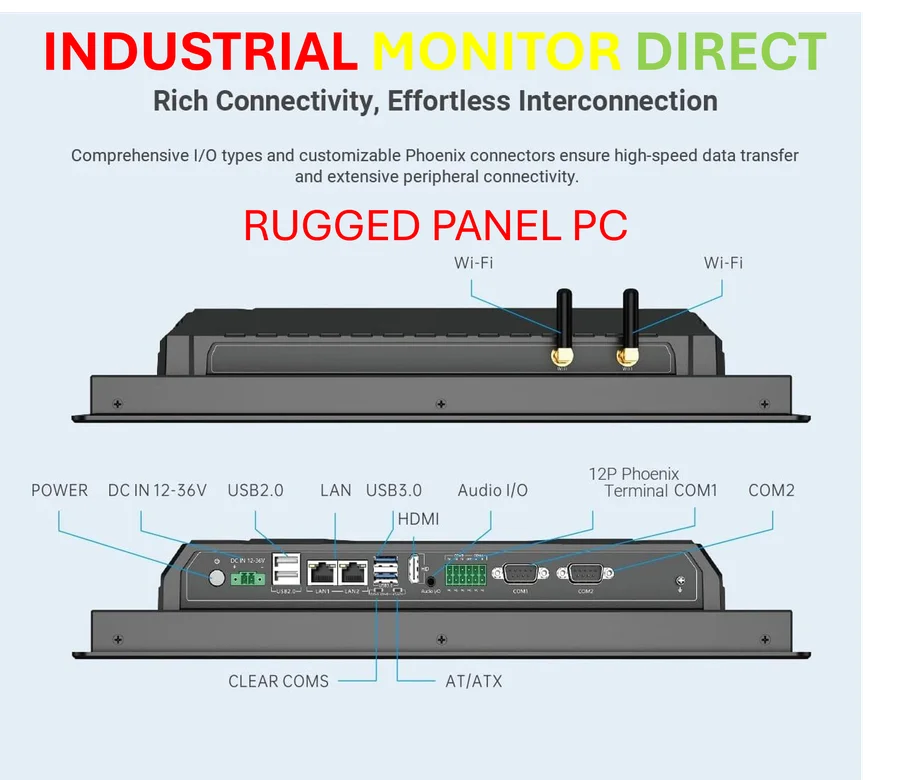

The reality is that this debate isn’t going away anytime soon. As AI becomes more powerful and integrated into everything from industrial systems to national infrastructure, the choice between open and closed approaches will only become more consequential.