The Fragmentation Problem in AI Deployment

As artificial intelligence transitions from research labs to real-world applications, developers face a critical challenge: fragmented software stacks that force them to rebuild models for different hardware targets. This duplication of effort creates significant bottlenecks, with teams spending valuable time on integration code rather than developing innovative features. The complexity of managing diverse frameworks, hardware-specific optimizations, and layered technologies has become the primary obstacle to scalable AI implementation.

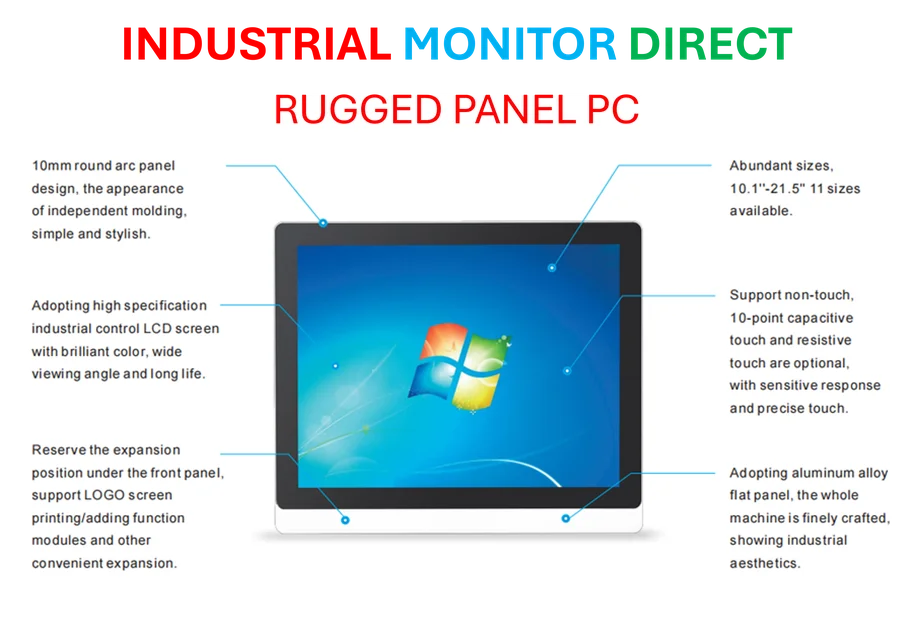

According to industry analysis, over 60% of AI initiatives stall before reaching production due to integration complexity and performance variability. This statistic underscores the urgent need for simplification across the AI development lifecycle.

The Five Pillars of Software Simplification

The movement toward unified AI deployment is coalescing around five fundamental principles that reduce re-engineering costs and technical risk:

- Cross-platform abstraction layers that minimize rework when porting models between different hardware environments

- Performance-tuned libraries deeply integrated into major machine learning frameworks

- Unified architectural designs that scale seamlessly from data centers to mobile devices

- Open standards and runtimes that reduce vendor lock-in while improving compatibility

- Developer-first ecosystems prioritizing speed, reproducibility, and scalability
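The first pillar, a cross-platform abstraction layer, can be pictured as a single entry point that hides which hardware path actually runs the model. The following is a minimal sketch under stated assumptions: the backend names ("cpu", "accelerator"), the registry, and the toy ReLU "model" are all hypothetical and do not correspond to any specific framework's API.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical registry mapping a target name to a function that runs the model.
Backend = Callable[[Sequence[float]], List[float]]
_BACKENDS: Dict[str, Backend] = {}


def register_backend(name: str) -> Callable[[Backend], Backend]:
    """Decorator that registers a backend under the given target name."""
    def wrap(fn: Backend) -> Backend:
        _BACKENDS[name] = fn
        return fn
    return wrap


@register_backend("cpu")
def run_on_cpu(inputs: Sequence[float]) -> List[float]:
    # Portable reference path: ReLU computed element by element.
    return [max(0.0, x) for x in inputs]


@register_backend("accelerator")
def run_on_accelerator(inputs: Sequence[float]) -> List[float]:
    # Stand-in for a vendor-tuned path (assumption). It must produce the
    # same results as the reference path so callers never notice the switch.
    return [x if x > 0.0 else 0.0 for x in inputs]


def run_model(
    inputs: Sequence[float],
    preferred: Tuple[str, ...] = ("accelerator", "cpu"),
) -> List[float]:
    """Run the model on the first registered backend in preference order."""
    for name in preferred:
        backend = _BACKENDS.get(name)
        if backend is not None:
            return backend(inputs)
    raise RuntimeError("no registered backend matches " + repr(preferred))
```

Application code calls only `run_model`, so porting to a new target means registering one more backend rather than rewriting callers. An unknown target name in `preferred` is skipped, which lets the same call fall back to the portable path.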

These advancements are particularly transformative for startups and academic teams that previously lacked resources for custom optimization work. Projects like Hugging Face’s Optimum and the MLPerf benchmarks are helping standardize and validate cross-hardware performance, creating more reliable deployment pathways.

Industry Momentum Toward Unified Platforms

Simplification is no longer theoretical; it is actively reshaping how organizations approach AI infrastructure. Major cloud providers, edge platform vendors, and open-source communities are converging on unified toolchains that accelerate deployment from cloud to edge. This shift is particularly evident in the rapid expansion of edge inference, where models run directly on devices rather than in the cloud.

The emergence of multi-modal and general-purpose foundation models has added urgency to this transformation. Models like LLaMA, Gemini, and Claude require flexible runtimes that can scale across diverse environments. AI agents, which interact and perform tasks autonomously, further drive the need for high-efficiency, cross-platform software solutions.

Recent industry projections indicate that nearly half of the compute shipped to major hyperscalers in 2025 will run on Arm-based architectures, highlighting a significant shift toward energy-efficient, portable AI infrastructure.

Hardware-Software Co-Design: The Critical Enabler

Successful simplification requires deep integration between hardware capabilities and software frameworks. This co-design approach ensures that hardware features (such as matrix multipliers and accelerator instructions) are properly exposed in software, while applications are designed to leverage underlying hardware capabilities efficiently.

Companies like Arm are advancing this model with platform-centric approaches that push hardware-software optimizations throughout the software stack. Recent demonstrations at industry events have shown how CPU architectures combined with AI-specific instruction set extensions and optimized libraries enable tighter integration with popular frameworks like PyTorch, ExecuTorch, and ONNX Runtime.

This alignment reduces the need for custom kernels or hand-tuned operators, allowing developers to unlock hardware performance without abandoning familiar toolchains. The practical benefits are substantial: in data centers, these optimizations deliver improved performance-per-watt, while on consumer devices, they enable responsive user experiences with background intelligence that remains power-efficient.
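One way to picture how a library exposes hardware features without forcing developers to write custom kernels is feature-based dispatch: the library picks a tuned kernel when the feature is present and otherwise falls back to a portable one. The sketch below is illustrative only. The feature label "matrix_ext" and the function names are hypothetical, the "tuned" kernel simply reuses the reference implementation, and real feature detection is platform-specific and not shown.

```python
from typing import Callable, Iterable, List, Tuple

Matrix = List[List[float]]
Kernel = Callable[[Matrix, Matrix], Matrix]


def matmul_reference(a: Matrix, b: Matrix) -> Matrix:
    """Portable matrix multiply used when no tuned kernel applies."""
    cols_b = list(zip(*b))  # columns of b, so each output cell is a dot product
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def matmul_tuned(a: Matrix, b: Matrix) -> Matrix:
    # Placeholder for a hardware-specific kernel (assumption). A real library
    # would call optimized native code here; this sketch reuses the reference
    # path so that both kernels are guaranteed to agree.
    return matmul_reference(a, b)


# (required feature, kernel) pairs in priority order.
# "matrix_ext" is a hypothetical label, not a real CPU flag name.
_KERNELS: List[Tuple[str, Kernel]] = [("matrix_ext", matmul_tuned)]


def select_kernel(features: Iterable[str]) -> Kernel:
    """Return the first kernel whose required feature is available."""
    available = set(features)
    for required, kernel in _KERNELS:
        if required in available:
            return kernel
    return matmul_reference


def matmul(a: Matrix, b: Matrix, features: Iterable[str] = ()) -> Matrix:
    """Single entry point: callers never name a kernel directly."""
    return select_kernel(features)(a, b)
```

Because callers only ever use `matmul`, a new hardware feature can be supported by adding one entry to `_KERNELS`, which mirrors the idea of optimizations landing inside the library rather than in application code.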

Essential Requirements for Sustainable Simplification

To realize the full potential of simplified AI platforms, several critical elements must be addressed:

- Consistent, robust toolchains and libraries that work reliably across devices and environments

- Open ecosystems where hardware vendors, framework maintainers, and model developers collaborate effectively

- Balanced abstractions that simplify development without obscuring performance tuning capabilities

- Built-in security and privacy measures, especially as more compute shifts to edge and mobile devices

The industry is increasingly recognizing that simplification doesn’t mean eliminating complexity entirely, but rather managing it in ways that empower innovation. As recent platform demonstrations have shown, the right approach enables AI workloads to run efficiently across diverse environments while reducing the overhead of custom optimization.

The Future of Cross-Platform AI Development

Looking ahead, the trajectory of AI deployment points toward greater convergence and standardization. Benchmarks like MLPerf are evolving from mere performance measurements to strategic guardrails that guide optimization efforts. The industry is moving toward more upstream integration, with hardware features landing directly in mainstream tools rather than requiring custom branches.
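To make the idea of a benchmark acting as a guardrail concrete, here is a minimal sketch of a regression check that could run in continuous integration. It is not MLPerf's methodology: the target names, the latency figures, and the 10% tolerance are all assumptions chosen for illustration.

```python
from statistics import median
from typing import Dict, List


def find_regressions(
    baseline_ms: Dict[str, float],
    samples_ms: Dict[str, List[float]],
    tolerance: float = 0.10,
) -> Dict[str, float]:
    """Return targets whose median latency exceeds the baseline by more
    than `tolerance` (a fraction, so 0.10 means 10%)."""
    regressions: Dict[str, float] = {}
    for target, samples in samples_ms.items():
        if target not in baseline_ms or not samples:
            continue  # no baseline or no data: nothing to compare
        observed = median(samples)
        limit = baseline_ms[target] * (1.0 + tolerance)
        if observed > limit:
            regressions[target] = observed
    return regressions


# Hypothetical numbers for two deployment targets.
baseline = {"cloud": 10.0, "edge": 40.0}
measured = {"cloud": [9.8, 10.1, 10.3], "edge": [46.0, 45.0, 47.0]}
flagged = find_regressions(baseline, measured)
```

With these made-up inputs, only the "edge" target is flagged, since its median of 46.0 ms is above the 44 ms limit. Using the median rather than the mean keeps a single noisy run from tripping the check.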

Perhaps most significantly, we’re witnessing the convergence of research and production environments, enabling faster transitions from experimental models to deployed applications through shared runtimes and tooling. Developer tools are also evolving to support this cross-platform future, with initiatives like native Arm support in GitHub Actions streamlining continuous integration workflows for diverse hardware targets.

The ultimate goal is clear: when the same AI model can land efficiently on cloud, client, and edge environments, organizations can accelerate innovation while reducing development costs. Ecosystem-wide simplification—driven by unified platforms, upstream optimizations, and open benchmarking—will separate the winners in the next phase of AI adoption.

References & Further Reading

This article draws from multiple authoritative sources. For more information, please consult:

- https://www.gartner.com/en/documents/3994810

- https://newsroom.arm.com/blog/arm-computex-2025

- https://newsroom.arm.com/blog/half-of-compute-shipped-to-top-hyperscalers-in-2025-will-be-arm-based

- https://www.arm.com/markets/artificial-intelligence/software
