As artificial intelligence becomes increasingly integrated into business operations, security experts are advocating for a fundamental shift in how organizations approach AI safety. Rather than treating AI systems as mere tools, companies should implement the same rigorous security protocols for AI agents that they use for human employees.

The Employee Paradigm: Rethinking AI Management

Security professionals like Meghan Maneval argue that organizations should adopt what she calls the “employee paradigm” when securing AI systems. This approach means subjecting AI agents to the same scrutiny, training, and controls as human staff members.

“Treat them like any other employees,” Maneval emphasizes, noting that this philosophy applies whether an organization is dealing with traditional machine learning algorithms, generative AI applications, or autonomous AI agents.

This paradigm shift represents a significant departure from current practices, in which AI systems often operate with minimal oversight. By extending human resources policies to AI, organizations can establish comprehensive security frameworks that address emerging risks proactively.

Comprehensive Security Training for AI Systems

Just as employees receive security awareness training, AI agents require systematic education on organizational policies and procedures. This includes training on data handling protocols, access limitations, and compliance requirements specific to the organization’s industry and risk tolerance.

Maneval recommends beginning with existing organizational policies regarding risk tolerance to determine which AI-related risks should receive priority mitigation. “The bottom line is that you have to start with what you’re already doing, and what are you willing to accept, and that turns into your policy statement, which you can then start to build controls around,” she explains.

This approach acknowledges that different organizations will have varying comfort levels with AI risk. Some companies may aggressively embrace AI tools, while others adopt more conservative stances with stricter policies.
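
To make the "policy statement first, controls second" sequence more concrete, here is a minimal sketch of how a stated risk tolerance might drive prioritization. It scores each AI risk on a likelihood × impact scale and keeps only the risks above the organization's tolerance. The 1 to 5 scales, the multiplication rule, the `AIRisk` and `prioritize` names, and the example risks are all illustrative assumptions borrowed from common risk-matrix practice. They are not part of Maneval's framework.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple risk-matrix score; many organizations use richer models.
        return self.likelihood * self.impact


def prioritize(risks: list[AIRisk], tolerance: int) -> list[AIRisk]:
    """Return risks scoring above the tolerance, highest score first.

    Anything at or below the tolerance is treated as accepted risk.
    """
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        AIRisk("prompt injection via inbound email", likelihood=4, impact=4),
        AIRisk("model drift in fraud scoring", likelihood=3, impact=4),
        AIRisk("off-brand chatbot tone", likelihood=3, impact=1),
    ]
    # A conservative organization sets a low tolerance. An aggressive adopter
    # might raise it and accept more of the register as-is.
    for risk in prioritize(register, tolerance=6):
        print(f"{risk.score:>2}  {risk.name}")
```

With a tolerance of 6, the first two risks (scores 16 and 12) are returned for mitigation. Raising the tolerance to 12 would leave only the prompt-injection risk. Either way, the tolerance value is effectively the policy statement and the returned list is what controls get built around.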

Implementing Role-Based Access Controls for AI

One of the most critical aspects of treating AI as employees involves implementing robust access controls. Just as human employees operate within defined permissions through role-based access controls (RBAC), AI systems should face similar restrictions.

“You know that humans can’t go and do whatever they want across your network and that their navigation within your system is bound by zero trust controls. Well, neither should that AI agent,” Maneval states.

This might mean requiring secondary approval for certain AI actions or limiting an AI agent’s access to specific systems and data repositories. The principle remains consistent: if a human employee wouldn’t have unrestricted access, neither should an AI system.
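
The sketch below shows one way the same role-based model could cover both an AI agent and a human employee. It is deny-by-default, and some actions are routed to a human for secondary approval instead of being allowed outright. The role names, action strings, and the `authorize` function are hypothetical and are not drawn from any specific product or from Maneval's material.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"  # route to a human for secondary approval
    DENY = "deny"


@dataclass
class Role:
    name: str
    allowed: set[str] = field(default_factory=set)            # actions permitted outright
    approval_required: set[str] = field(default_factory=set)  # actions gated by a human sign-off


# Hypothetical role table. Human and AI principals share the same model,
# which is the point of treating agents like employees.
ROLES: dict[str, Role] = {
    "support-agent-ai": Role(
        "support-agent-ai",
        allowed={"read:tickets", "write:ticket_reply"},
        approval_required={"issue:refund"},
    ),
    "support-staff-human": Role(
        "support-staff-human",
        allowed={"read:tickets", "write:ticket_reply", "issue:refund"},
    ),
}


def authorize(role_name: str, action: str) -> Decision:
    role = ROLES.get(role_name)
    if role is None:
        return Decision.DENY  # unknown principal: deny by default
    if action in role.allowed:
        return Decision.ALLOW
    if action in role.approval_required:
        return Decision.NEEDS_APPROVAL
    return Decision.DENY  # anything not explicitly granted is refused


if __name__ == "__main__":
    for action in ("read:tickets", "issue:refund", "read:payroll"):
        print(action, authorize("support-agent-ai", action).value)
```

Denying anything not explicitly granted mirrors the zero trust stance in Maneval's quote. The AI agent cannot wander into payroll data, and it can request refunds but not complete them on its own.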

The Critical Importance of AI Monitoring and Auditing

Effective AI security requires continuous monitoring and regular audits. Maneval advocates combining multiple monitoring techniques to address various risk vectors, including the phenomenon of “AI drifting.”

AI drifting occurs when an AI model’s performance gradually declines over time due to changes in real-world data, user behavior, or environmental factors. This degradation can render the AI’s predictions or decisions increasingly inaccurate or irrelevant.
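
Maneval does not name a specific drift metric. One commonly used option is the Population Stability Index (PSI), which compares the distribution of a model input or score at deployment time against what the model sees today. The sketch below assumes equal-width bins over the baseline range. The 0.1 and 0.25 cut-offs in `drift_level` are widely quoted rules of thumb, not a standard.

```python
from __future__ import annotations

import math


def population_stability_index(
    baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """Compare two samples of one numeric feature (or model score).

    Bins are equal-width over the baseline's range; values outside that
    range are clipped into the edge bins. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_level(psi: float) -> str:
    # Rules of thumb only; tune thresholds to the model and its risk.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # scores seen at deployment
    current = [0.5 + i / 200 for i in range(100)]  # scores seen this week
    score = population_stability_index(baseline, current)
    print(f"PSI={score:.3f} -> {drift_level(score)}")
```

PSI only says that the data has moved. Whether the model's decisions have actually degraded still has to be checked against real outcomes.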

Maneval offers a compelling analogy: “This is just like a store tracking four cartons of milk but never checking if they’re spoiled. AI systems often monitor outputs without assessing real-world usage or quality. Without proper thresholds, alerts, and usage logs, you’re left with data that exists but isn’t actually useful.”
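
To illustrate "checking if the milk is spoiled," the sketch below keeps a sliding-window usage log and raises an alert when the share of outputs judged correct drops below a threshold. It assumes each logged interaction eventually receives a correctness label, for example from human review or downstream feedback. The `UsageRecord` and `QualityMonitor` names, the window size, and the thresholds are hypothetical.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class UsageRecord:
    request_id: str
    output: str
    was_correct: bool  # hypothetical label from human review or downstream feedback


class QualityMonitor:
    """Alerts when reviewed outputs in a sliding window fall below a quality threshold."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9, min_samples: int = 20):
        self.records: deque[UsageRecord] = deque(maxlen=window)  # doubles as a usage log
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def log(self, record: UsageRecord) -> str | None:
        """Record one interaction and return an alert message if quality has slipped."""
        self.records.append(record)
        if len(self.records) < self.min_samples:
            return None  # too little evidence to judge yet
        accuracy = sum(r.was_correct for r in self.records) / len(self.records)
        if accuracy < self.min_accuracy:
            return (
                f"ALERT: accuracy {accuracy:.2f} below {self.min_accuracy:.2f} "
                f"over last {len(self.records)} requests"
            )
        return None


if __name__ == "__main__":
    monitor = QualityMonitor(window=10, min_accuracy=0.8, min_samples=5)
    outcomes = [True] * 6 + [False] * 3
    for i, ok in enumerate(outcomes):
        alert = monitor.log(UsageRecord(f"req-{i}", "...", ok))
        if alert:
            print(alert)
```

The `min_samples` guard is a deliberate design choice. It keeps a single bad answer at startup from triggering an alert, at the cost of a short blind spot.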

Building a Comprehensive AI Audit Program

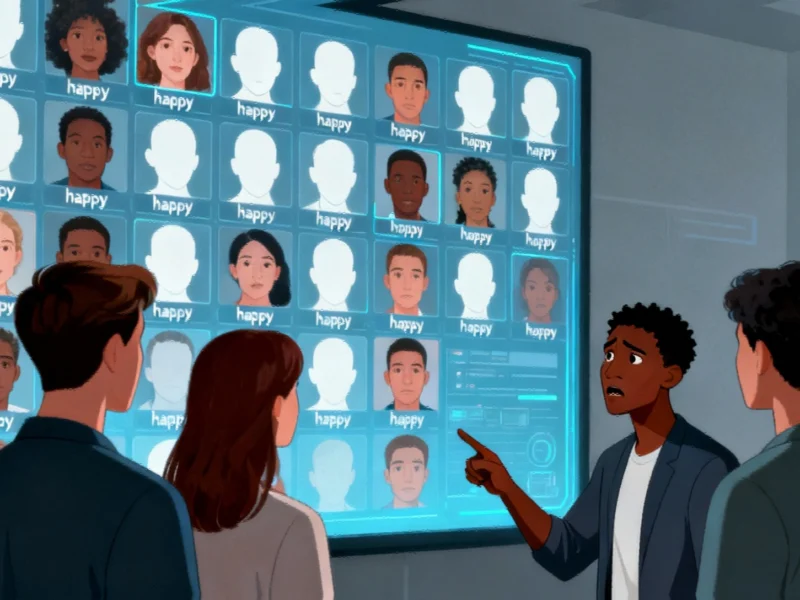

An ideal AI audit program should evaluate multiple dimensions of AI systems. According to Maneval’s framework presented at security conferences, this includes auditing:

- The AI technology itself – examining the underlying algorithms and training data

- AI outputs – monitoring for incorrect information, inappropriate suggestions, or data leaks

- Security controls – evaluating guardrails, access controls, and data protection measures

AI algorithm audits should specifically assess “the model’s fairness, accuracy, and transparency,” while output audits must vigilantly identify red flags that indicate potential security or compliance issues.
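
As one narrow example of an output audit, the sketch below scans an AI response for a few data-leak red flags: email addresses, strings in US Social Security number format, and long digit runs that pass the Luhn checksum used by payment card numbers. The patterns and labels are illustrative assumptions. A real program would rely on dedicated data-loss-prevention tooling and cover far more than these three cases.

```python
from __future__ import annotations

import re

# Illustrative patterns only; production data-loss-prevention tooling is far broader.
EMAIL = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE = re.compile(r"\b\d{13,16}\b")  # digits only; no spaces or dashes


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on long digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def audit_output(text: str) -> list[str]:
    """Return red-flag labels found in one AI response (empty list if none)."""
    flags: list[str] = []
    if EMAIL.search(text):
        flags.append("email_address")
    if US_SSN.search(text):
        flags.append("us_ssn_format")
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE.finditer(text)):
        flags.append("payment_card_number")
    return flags


if __name__ == "__main__":
    print(audit_output("Sure, the card on file is 4111111111111111."))
```

The Luhn check matters because order numbers and timestamps also produce long digit runs. For example, 4111111111111111, a widely published test card number, is flagged, while 4111111111111112 is not.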

The Future of AI Governance

As AI agents gain access to sensitive data, third-party systems, and decision-making authority, their status as organizational insiders becomes undeniable. This reality demands a fundamental rethinking of AI governance frameworks.

Maneval’s approach centers on the principle that AI systems should be treated as managed staff members rather than unmanaged assets. This perspective acknowledges that AI agents, like human employees, can introduce significant organizational risk if improperly supervised.

“Auditing AI isn’t about calling someone out,” Maneval concludes, “it’s about learning how the system works so we can help do the right thing.” This collaborative approach to AI security may well define the next generation of organizational risk management as artificial intelligence becomes increasingly embedded in business operations.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.